If you are a non-chemical engineer this manual will provide you with the fundamentals in this area and enable you to confidently talk to and work effectively with chemical engineers and process equipment.

Revision 8

Vivek Mehra, BSc, MBA

Website: www.idc-online.com

E-mail: idc@idc-online.com

IDC Technologies Pty Ltd

PO Box 1093, West Perth, Western Australia 6872

Offices in Australia, New Zealand, Singapore, United Kingdom, Ireland, Malaysia, Poland, United States of America, Canada, South Africa and India

Copyright © IDC Technologies 2011. All rights reserved.

First published 2005

All rights to this publication, associated software and workshop are reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means electronic, mechanical, photocopying, recording or otherwise without the prior written permission of the publisher. All enquiries should be made to the publisher at the address above.

Disclaimer

Whilst all reasonable care has been taken to ensure that the descriptions, opinions, programs, listings, software and diagrams are accurate and workable, IDC Technologies do not accept any legal responsibility or liability to any person, organization or other entity for any direct loss, consequential loss or damage, however caused, that may be suffered as a result of the use of this publication or the associated workshop and software.

In case of any uncertainty, we recommend that you contact IDC Technologies for clarification or assistance.

Trademarks

All logos and trademarks belong to, and are copyrighted by, their respective companies.

Acknowledgements

IDC Technologies expresses its sincere thanks to all those engineers and technicians on our training workshops who freely made available their expertise in preparing this manual.

Table of Contents

1 Chemical engineering- an overview 1

1.1 Basics of chemical engineering 1

1.2 Unit operations 2

1.3 Thermodynamics 8

1.4 Chemical Kinetics 9

1.5 Chemical engineer – scope & responsibilities 9

1.6 “Ten greatest achievements” of chemical engineering 15

1.7 Chemical engineering “Today & Tomorrow” 17

2 Stoichiometry 19

2.1 Introduction 19

2.2 Understanding chemical formulas and equations 19

2.3 Balancing chemical equations 25

2.4 Chemical periodicity 27

2.5 Molecular weight 28

2.6 The mole and molar mass 29

2.7 Percent composition 29

2.8 Introduction to solutions 32

2.9 Units and dimensions 33

2.10 Process variables 36

3 Chemical kinetics 41

3.1 Chemical reactions – Basic concepts 41

3.2 Classification of chemical reactions 42

3.3 Chemical reaction profile 43

3.4 Classification of reactors 44

3.5 Catalysts 49

3.6 Promoters 53

3.7 Efficiency criteria of a chemical process 55

4 Fluid mechanics 57

4.1 Introduction 57

4.2 Volumetric properties of liquids 57

4.3 Liquid-column manometers 58

4.4 Mechanical pressure gauges 61

4.5 Measurement of fluid flow 65

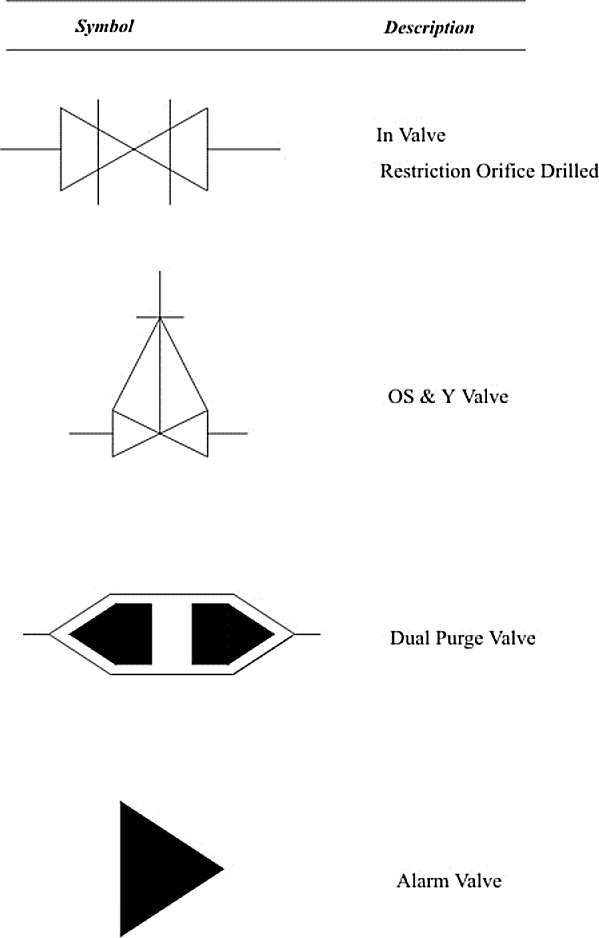

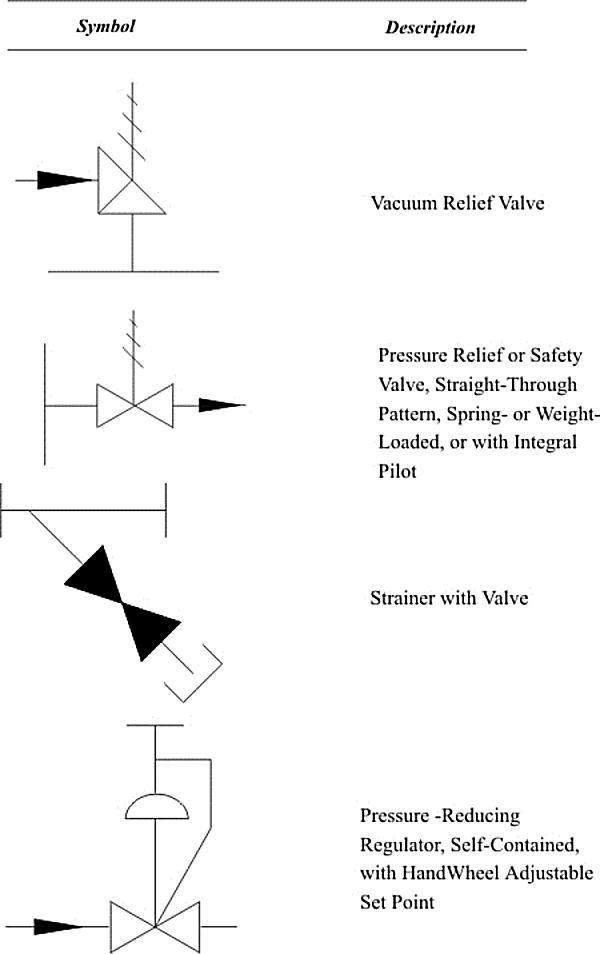

4.6 Valves 82

4.7 Fluid moving machinery 90

4.8 Centrifugal pumps 91

4.9 Positive-displacement pumps 92

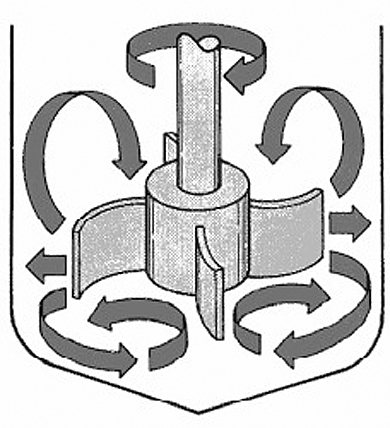

4.10 Agitation equipments 96

5 Heat transfer and its applications 101

5.1 Heat transfer mechanism 101

5.2 Heat exchangers 101

5.3 Boilers 111

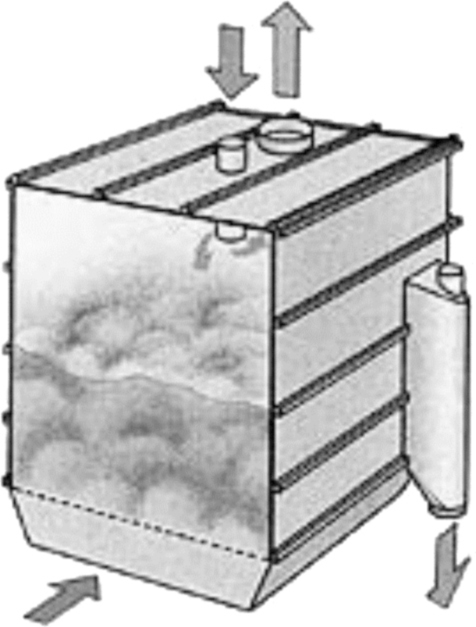

5.4 Evaporators 115

6 Mass transfer and its applications 125

6.1 Mass transfer phenomena 125

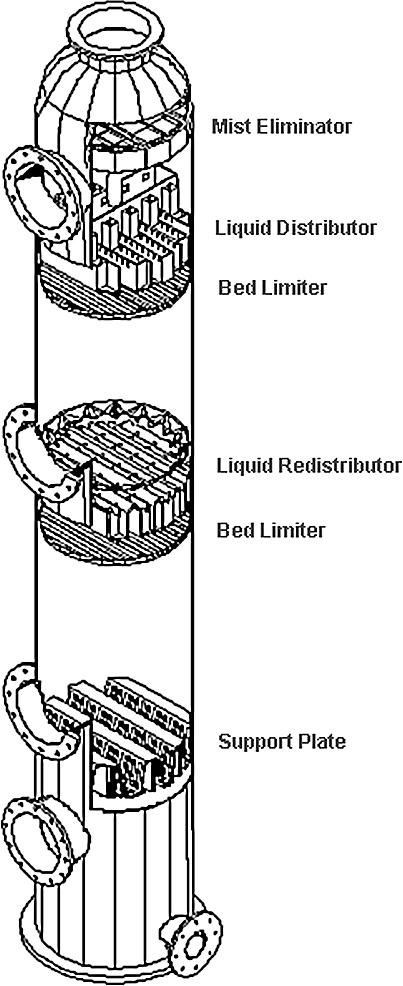

6.2 Distillation 125

6.3 Types of distillation columns 127

6.4 Column internals 128

6.5 The types of distillation 137

6.6 Sublimation 143

6.7 Leaching 143

6.8 Centrifugal extractors 148

6.9 Gas absorption 149

6.10 Cooling towers 152

6.11 Desiccant dehumidifiers 157

6.12 Adsorptions systems 159

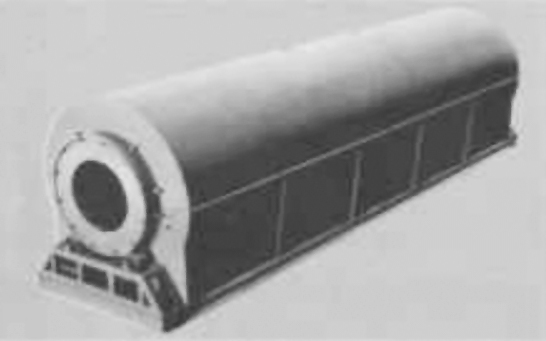

6.13 Drying 163

6.14 Drying equipment 164

7 Thermodynamics 183

7.1 Applications of thermodynamics principles 183

7.2 Compressor 187

7.3 Ejector-system 194

7.4 Heat conversion & power cycles 195

7.5 Refrigeration and liquefaction 211

8 Process design 217

8.1 Introduction 217

8.2 Process design considerations 218

8.3 Equipment design factors 218

8.4 A look at common industrial chemicals 219

8.5 Materials of construction 221

8.6 Types of corrosion 222

8.7 Linings for chemical plants and equipment 223

8.8 Rules of thumb 224

9 Process control 231

9.1 Overview 231

9.2 Control system 231

9.3 Practical control examples 234

9.4 Control actions 240

9.5 Examples of control 241

9.6 Control loop diagrams 248

9.7 Modes of automatic control 249

10 Chemical process safety 259

10.1 Safety responsibilities 259

10.2 Standard safety rules and regulations 261

10.3 Chemical hazards and chemical safety data sheets 262

10.4 General safety practices 266

10.5 Good housekeeping plan 276

10.6 Personal protective equipments 276

11 Classification of process diagrams/data sheets and their application 277

11.1 Introduction 277

11.2 Types of process drawings 277

11.3 Block Flow Diagram (BFD) 277

11.4 Process Flow Diagram (PFD) 278

11.5 Piping and Instrument Diagram (P&ID) 279

11.6 Utility Flow Diagram (UFD) 280

11.7 Data sheets 280

12 Unit operations of particulate solids 283

12.1 Storage of solids 282

12.2 Feeders 286

12.3 Crushers and mills 288

12.4 Cutting machines 295

12.5 Crystallization 296

12.6 Mixers 298

12.7 Mechanical separation 301

12.8 Powder compacting equipments 306

12.9 Filtration 310

12.10 Cryogenic grinding 314

12.11 Blending 315

13 Process economics 317

13.1 Capital investment 317

13.2 Total product costs 319

13.3 Economic analysis 320

13.4 Life cycle analysis 321

13.5 Real-Time Optimization (RTO) of chemical processes 322

Appendix A Periodic Table 325

Appendix B Fundamental physical constants 327

Appendix C Process equipment symbols 331

Appendix D Typical instrumentation representation 349

Appendix E Typical P&ID 375

Appendix F Typical process data sheet 377

Appendix G Some websites for safety information 379

Appendix H Practical exercises 383

1.1 Basics of chemical engineering

Chemical engineering is associated with:

- Efficient and economic control of chemical processes

- Design of chemical reactors and process plants

- Development of sustainable products

- Pollution control and treatment of industrial wastes

People have used chemicals for a very long time, but chemical engineering was recognized as a separate field only about a century ago. Egyptians developed certain types of paper as early as 2000 BC, and glass is presumed to have been invented close to 5000 BC. Perhaps the single most important pursuit in early chemistry was the ‘manufacture’ of gold. As soon as people discovered this metal they became obsessed with it, and no civilization could resist the shine of this precious metal. In the Middle Ages a set of ‘mad-hatters’ decided to live their dream of converting base metals to gold. The conversion became the ‘Holy Grail’ of those pursuing it. Some of the greatest discoveries in physical chemistry were made by these people, even though the ‘Grail’ remains elusive. Today their work is recognized as a pioneering effort and the first standardization of manufacturing techniques, which ultimately gave us the field of chemical engineering. These scientists are collectively referred to as the alchemists.

Like other engineering disciplines, chemical engineering came to be recognized as a major field of engineering in the 19th century. During the industrial revolution, the demand for engineers who could design chemical reactors and plants grew sharply because of the increasing use of chemicals in everyday life. The chemical industry was growing rapidly and needed experts to design and run chemical plants. Until 1910, the chemical industry had to rely mainly on mechanical engineers and chemists.

Typical chemical process plant

However, as new methods and techniques emerged, chemical processing became too complex, and it called for engineers trained specifically in chemical processing. The design of chemical reactors and other equipment in a chemical process plant was beyond the scope of chemists and mechanical engineers. With all these factors in view, the start of a new engineering discipline for chemicals was seriously considered.

As a result, chemical engineering emerged as a separate discipline in 1910 when professors at the Massachusetts Institute of Technology (MIT) realized that neither mechanical engineering nor chemistry offered sound approaches to a chemical plant’s design. So a new branch of engineering was started to prepare engineers specializing in the design, construction, and operation of chemical processing plants. Subsequently, this field gained universal recognition and many institutions around the globe started teaching the subject. Today, thousands of chemical engineers are working around the globe and scores of young men and women are being trained.

1.2 Unit operations

Processing and manufacturing of chemicals in industries is based on many operations such as heat transfer, mass transfer, fluid flow, distillation, evaporation, absorption, drying, leaching, mixing, crystallization, adsorption, and humidification.

The idea of treating these processes of the chemical industry as unit operations was also put forward by professors at MIT. They characterized the physical operations necessary for manufacturing chemicals as unit operations. Although originally largely descriptive, these unit operations have been the object of vigorous study and can now be used with sound mathematical procedures for plant design predictions.

In 1930, P. H. Groggins proposed a similar approach, classifying chemical operations as unit processes. Such processes include nitration, oxidation, hydrogenation, sulphonation, chlorination, and esterification. Developing a lab-scale process, designed by a chemist, into a large-scale industrial process is a difficult task and requires knowledge of the chemicals as well as of the mechanical aspects of the equipment required.

The physical operations necessary for manufacturing chemicals are called unit operations. The unit operations concept is a method of organizing much of the subject matter of chemical engineering, and unit operations can fairly be called the heart of chemical engineering. The concept is based on the fact that by systematically studying the operations involved in the chemical industry (such as heat transfer, mass transfer, fluid flow, drying, distillation, evaporation, absorption, extraction, and mixing), the treatment of all processes is unified and simplified. The unit operations are largely used to conduct the primary physical steps of preparing the reactants, separating and purifying the products, recycling unconverted reactants, and controlling the energy transfer into or out of the chemical reactors. The design of the equipment for these operations is also studied in unit operations. Because of the complexity and variety of modern chemical manufacturing processes, the need to arrange the different processes systematically has become undeniable. The chemical steps themselves are conducted by controlling the flow of material and energy to and from the reaction zone.

1.2.1 Fluid mechanics

The branch of engineering that investigates the behavior of fluids is called fluid mechanics. It is a part of a larger branch of engineering called continuum mechanics, which deals with the behavior of fluids as well as stressed solids. A fluid is a substance that does not permanently resist distortion. An attempt to alter the shape of a mass of fluid results in layers of fluids sliding over one another until a new shape is attained. During the change in shape, shear stresses exist depending upon the viscosity of the fluid and the rate of sliding, but when the final shape is achieved all the shear stresses disappear. A fluid in equilibrium is free from shear stresses.

Fluids may be compressible or incompressible. If the density of a fluid changes only slightly with changes in temperature and pressure, the fluid is called incompressible; if the density changes significantly, the fluid is said to be compressible. Gases are generally considered to be compressible while liquids are considered incompressible.

The behavior of fluids is very important in chemical engineering and is a major part of the unit operations principle. An understanding of fluids is essential not only for accurately treating problems in the movement of fluids through pipes, compressors, pumps, and all kinds of process equipment but also for the study of heat flow and the many separation principles that depend on diffusion and mass transfer. The design and study of measuring devices (such as flow meters, area meters, and pressure gauges), transportation equipment (such as compressors and pumps), and mixing and agitation equipment (such as mixers and agitators) are considered in fluid mechanics.

Fluid mechanics can be divided into two branches:

- Fluid statics

- Fluid dynamics

Fluid statics deals with fluids at rest, or in an equilibrium state with no shear stress. It is concerned with the properties and behavior of fluids. In the case of liquids this subject is called hydrostatics and in the case of gases it is called pneumatics.

Fluid dynamics, also called fluid flow, deals with flowing fluids, or with fluids in which one section is in motion relative to the other parts. The flow of a fluid is of two types:

- Laminar flow: The flow in which the layers of the fluid are flowing parallel to the axis of the pipe or conduit

- Turbulent flow: The flow in which the layers of the moving fluids are not parallel to the axis of the pipe and the fluid is disturbed from point to point
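
The two flow types above are usually told apart in practice with the Reynolds number, which this section does not introduce. The following is a minimal sketch, assuming the common textbook rule of thumb for flow in circular pipes (laminar below roughly 2100, turbulent above roughly 4000, transitional in between). The function names, limits, and fluid properties are illustrative assumptions, not values taken from this manual.

```python
def reynolds_number(density, velocity, diameter, viscosity):
    """Return the dimensionless Reynolds number Re = rho * v * D / mu (SI units)."""
    return density * velocity * diameter / viscosity


def flow_regime(re, laminar_limit=2100.0, turbulent_limit=4000.0):
    """Classify pipe flow; both limits are assumed rule-of-thumb values."""
    if re < laminar_limit:
        return "laminar"
    if re > turbulent_limit:
        return "turbulent"
    return "transitional"


# Illustrative case: a water-like fluid (assumed 1000 kg/m^3, 0.001 Pa.s)
# flowing in a 50 mm pipe at a low and a high velocity.
for velocity in (0.02, 2.0):  # m/s
    re = reynolds_number(1000.0, velocity, 0.05, 0.001)
    print(f"v = {velocity} m/s: Re = {re:.0f}, {flow_regime(re)}")
```

With these assumed properties the slow case falls in the laminar range and the fast case in the turbulent range.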

Chemical engineers are continuously involved with the flow of fluids. In industrial applications, they have to transport fluids from one point to another through pipes or open ducts, which requires determining the pressure drops in the system, selecting a proper type of pump or compressor, calculating the power required for pumping or compression, and measuring flow rates. All these aspects are studied in fluid flow. A major portion of fluid flow deals with the transportation, metering, and mixing and agitation of fluids.
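
As a rough illustration of how pressure drop and pumping power are linked, the sketch below uses the standard relation that the hydraulic power delivered to an incompressible fluid equals volumetric flow rate times pressure rise, and divides by an assumed pump efficiency to estimate shaft power. The function names and the numbers are hypothetical, chosen only to show the arithmetic.

```python
def hydraulic_power(flow_rate_m3_s, pressure_rise_pa):
    """Power delivered to the fluid, in watts: P = Q * delta_p."""
    return flow_rate_m3_s * pressure_rise_pa


def shaft_power(flow_rate_m3_s, pressure_rise_pa, efficiency):
    """Power the driver must supply, given an assumed overall pump efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in the range (0, 1]")
    return hydraulic_power(flow_rate_m3_s, pressure_rise_pa) / efficiency


# Illustrative duty: 0.01 m^3/s against a 200 kPa pressure rise, 70 % efficient pump.
p_fluid = hydraulic_power(0.01, 200_000.0)
p_shaft = shaft_power(0.01, 200_000.0, 0.70)
print(f"hydraulic power = {p_fluid:.0f} W, shaft power = {p_shaft:.0f} W")
```

The pressure rise in a real system would come from a pressure-drop calculation for the piping, which is outside the scope of this sketch.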

1.2.2 Heat transfer

Heat transfer is the branch of engineering that deals with the rates of heat exchange between hot and cold bodies. The driving force for heat transfer is the temperature difference, expressed per unit distance as the temperature gradient. In a majority of chemical processes heat is either given out or absorbed. Most of the time the fluids must be heated or cooled in a variety of equipment such as boilers, heaters, condensers, furnaces, dryers, evaporators, and reactors. In all of these cases the fundamental problem is transferring heat at the desired rate. Sometimes it is necessary to prevent the loss of heat from vessels or pipes.

The control of the flow of heat at the desired rate is one of the most important areas of chemical engineering. The principles and laws governing the rates of heat flow are studied under the heading of heat transfer. Although the transfer of heat is involved in every unit operation, in evaporation, drying, and combustion the primary concern is the transfer of heat rather than the transfer of mass, and these operations are governed by the rate of heat transfer. The laws and equations of heat transfer are used to design the equipment required for these processes.

Evaporation is the process used to concentrate a solution consisting of a non-volatile solute and volatile solvent. In a majority of evaporations the solvent is water.

Drying is the removal of relatively small amounts of water or other liquid from the solid material to reduce the content of residual liquid to a low value.

Heat transfer can take place through the following three modes:

- Conduction

- Convection

- Radiation

However, most of the processes are a combination of two or more modes of heat transfer.

Conduction

Conduction is the transfer of heat through fixed material such as stationary walls. In a solid, the flow of heat is the result of the transfer of vibrational energy from one molecule to another, and in fluids it occurs in addition as a result of the transfer of kinetic energy. Heat transfer through conduction may also arise from the movement of free electrons.
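
For steady conduction through a flat stationary wall, the heat flow is commonly estimated with Fourier's law, q = k A (T_hot - T_cold) / thickness. The sketch below assumes that one-dimensional, steady-state form with a constant thermal conductivity. The function name and the wall data are illustrative assumptions, not values from this manual.

```python
def conduction_heat_rate(conductivity, area, t_hot, t_cold, thickness):
    """Steady one-dimensional conduction through a flat wall, in watts.

    conductivity in W/(m.K), area in m^2, temperatures in K or deg C,
    thickness in m. Assumes constant conductivity and no heat generation.
    """
    if thickness <= 0.0:
        raise ValueError("thickness must be positive")
    return conductivity * area * (t_hot - t_cold) / thickness


# Illustrative wall: assumed k = 0.7 W/(m.K), 10 m^2, 0.2 m thick, 20 K difference.
q = conduction_heat_rate(0.7, 10.0, 40.0, 20.0, 0.2)
print(f"heat flow through the wall = {q:.0f} W")
```

Doubling the wall thickness in this model halves the heat flow, which is the basis for adding insulation to vessels and pipes.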

Convection

Convection is the heat transfer occurring due to the mixing of relatively hot and cold portions of a fluid. If this mixing takes place due to density differences, then such a process is called natural or free convection, e.g. a pool of liquid heated from below. However, if the mixing takes place due to eddies produced by mechanical agitation then such a process is called forced convection. It is important to note that convection requires mixing of fluid elements and is not governed by just the temperature difference as in conduction and radiation.

Radiation

Radiation is the transfer of radiant energy from one body to another. All materials radiate thermal energy in the form of electromagnetic waves. When radiation falls on a second body it may be partially reflected, transmitted, or absorbed. Only the fraction that is absorbed appears as heat in the body. While heat transfer in general deals with the transfer of heat between hot and cold bodies, process heat transfer deals with the rates of heat exchange as they occur in the heat-transfer equipment of engineering and chemical processes.
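
The radiative exchange described above is often estimated with the Stefan-Boltzmann law. The sketch below assumes the simplest textbook case, a small gray body of emissivity eps completely enclosed by much larger surroundings, for which the net rate is q = eps * sigma * A * (T_body^4 - T_surr^4) with absolute temperatures. The function name and the example values are illustrative assumptions.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W/(m^2.K^4)


def net_radiation_rate(emissivity, area, t_body_k, t_surroundings_k):
    """Net radiant heat loss of a small gray body in large surroundings, in watts.

    Temperatures must be absolute (kelvin). A positive result means the
    body loses heat to the surroundings.
    """
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must be between 0 and 1")
    return emissivity * STEFAN_BOLTZMANN * area * (t_body_k**4 - t_surroundings_k**4)


# Illustrative case: 1 m^2 surface, assumed emissivity 0.8, 500 K body, 300 K surroundings.
q = net_radiation_rate(0.8, 1.0, 500.0, 300.0)
print(f"net radiant heat loss = {q:.0f} W")
```

Because the rate depends on the fourth power of absolute temperature, radiation becomes far more important in furnaces and fired heaters than in equipment near ambient temperature.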

1.2.3 Mass transfer

Mass transfer is the transfer of a component in a mixture from a region in which it has a high concentration to a region in which its concentration is lower. This process can occur in a gas, liquid, or vapor. It can result from the random velocities of the molecules (molecular diffusion) or from the circulating or eddy currents present in a turbulent fluid (eddy diffusion). Just as the temperature gradient is the driving force for heat transfer, the concentration gradient is the driving force for mass transfer. Many unit operations such as distillation, absorption, extraction, leaching, membrane separation, dehumidification, crystallization, ion exchange, and adsorption are considered mass transfer operations. Although the transfer of heat is also involved in these operations, the rate of mass transfer governs the rate phenomena in these processes. Unlike purely mechanical separation processes, which utilize differences in density and particle size, these methods make use of differences in vapor pressure, solubility, or diffusivity.
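
The role of the concentration gradient as a driving force can be illustrated with Fick's first law of molecular diffusion. The sketch below assumes steady diffusion across a stagnant layer with a linear concentration profile, so the flux is J = D (C_high - C_low) / thickness. The function name, diffusivity, and concentrations are illustrative assumptions, not data from this manual.

```python
def diffusion_flux(diffusivity, c_high, c_low, thickness):
    """Steady molecular diffusion flux across a stagnant layer, in mol/(m^2.s).

    diffusivity in m^2/s, concentrations in mol/m^3, thickness in m.
    Assumes a linear concentration profile (Fick's first law).
    """
    if thickness <= 0.0:
        raise ValueError("thickness must be positive")
    return diffusivity * (c_high - c_low) / thickness


# Illustrative liquid-phase case: assumed D = 2e-9 m^2/s across a 1 mm layer,
# with concentration falling from 100 to 20 mol/m^3.
flux = diffusion_flux(2e-9, 100.0, 20.0, 1e-3)
print(f"diffusion flux = {flux:.2e} mol/(m^2.s)")
```

The flux falls to zero when the two concentrations are equal, mirroring the way heat flow stops when there is no temperature difference.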

Distillation

The function of distillation is to separate, by vaporization, a liquid mixture of miscible and volatile substances into individual components or, in some cases, into groups of components.

Absorption

In absorption a soluble vapor is removed from its mixture with an inert gas by means of a liquid in which the solute gas is more or less soluble. The solute is subsequently recovered from the liquid by distillation, and the absorbing liquid can either be discarded or reused.

Desorption

When a solute is transferred from the solvent liquid to the gas phase, the operation is known as stripping or desorption.

Dehumidification

In dehumidification the vapor of a pure liquid is partially removed from an inert or carrier gas by condensation. Usually the carrier gas is virtually insoluble in the liquid.

Membrane separations

In membrane separations, including gas separations, reverse osmosis, and ultrafiltration, one component of a liquid or gaseous mixture passes through a selective membrane more readily than the other components.

Adsorption

In adsorption a solute is removed from either a liquid or a gas through contact with a solid adsorbent, the surface of which has a special affinity for the solute.

Liquid extraction

In liquid extraction, also called solvent extraction, a mixture of two components is treated with a solvent that preferentially dissolves one or more of the components in the mixture. The mixture so treated is called the raffinate and the solvent-rich phase is called the extract. In the extraction of solids, or leaching, soluble material is dissolved from its mixture with an inert solid by means of a liquid solvent.

Crystallization

This process is used to obtain materials in attractive and uniform crystals of good purity, separating a solute from a melt or a solution and leaving impurities behind.

1.2.4 Solid particulate operations

Also termed particle technology, this branch of unit operations deals with solids handling and is mainly concerned with the mixing, size reduction, and mechanical separation of solids. Solids in general are more difficult to handle than fluids. In processing, solids appear in a variety of forms such as angular pieces, continuous sheets, and finely divided powders. They may be hard and abrasive, tough and rubbery, soft or fragile, dusty, cohesive, free flowing, or sticky. Whatever their form, means must be found to handle these solids.

The mixing of solids resembles to some extent the mixing of low-viscosity liquids; however, mixing solids requires much more power. In mixing, two or more separate components are intermingled to obtain a uniform product. Some of the mixers and blenders used for liquids are also used for solids. The solid mixers mainly used are kneaders, dispersers, masticators, mixer-extruders, mixing rolls, pug mills, ribbon blenders, screw mixers, tumbling mixers, and impact wheels.

Size reduction

Size reduction, also referred to as comminution, is a term applied to the methods used to cut or break down solid particles into smaller pieces.

Reduction of particle size is usually carried out in four ways:

- Compression

- Impact

- Attrition or rubbing

- Cutting

Separations

Separations can be classified into two classes:

- Diffusional operations: involve the transfer of material between phases, e.g. absorption, distillation, and adsorption

- Mechanical separations: used for heterogeneous mixtures. This class of separation processes consists of techniques based on physical differences between the particles such as size, shape, or density. They are applicable to separating solids from gases, liquid drops from gases, solids from solids, and solids from liquids

Two general methods of mechanical separation are:

- The use of a sieve or membrane, such as a screen or a filter, which retains one component and allows the other to pass

- The utilization of differences in the rate of sedimentation of particles or drops as they move through a liquid or a gas

The operations included in mechanical separations are screening, filtration, and gravity and centrifugal settling.

Screening

Screening is a method of separating particles according to size alone. In industrial screening the solids are dropped or thrown against a screening surface. The undersize (also called fines) pass through the screen openings leaving behind oversize (also called tails) particles. Industrial screens are made from woven wire, silk, plastic cloth, metal, and perforated or slotted metal plates. Stationary screens, grizzlies, gyrating screens, vibrating screens, and centrifugal sifters are used for this purpose.

Filtration

Filtration is the separation of solid particles from a fluid by passing the fluid through a filtering medium on which the solids are deposited. Industrial filtrations range from simple straining to highly complex separations. The fluid may be a liquid or a gas; the valuable stream from the filter may be the fluid, the solids, or both.

Filters are divided into three main groups:

- Cake filters separate relatively large amounts of solids as a cake of crystals or sludge. Filter presses, shell and leaf filters, belt filters, rotary drum filters, and batch centrifuge filters are used for this purpose

- Clarifying filters remove small amounts of solids to produce a clean gas or a sparkling clear liquid by trapping the solid particles inside the filter medium. Gravity bed filters, cartridge filters, edge filters, tank filters, pad filters, bag filters, and granular bed filters are used for this purpose

- Cross-flow filters are used for very fine particles or for micro-filtration. In a cross-flow filter the feed suspension flows under pressure at a fairly high velocity across the filter medium. Some of the liquid passes through the medium as a clear filtrate, leaving behind a more concentrated suspension. For these filters different types of membranes are used

Settling processes

Settling processes are used for mechanical separations, utilizing the movement of solid particles or liquid drops through a fluid.

Gravity settling processes are based on the fact that particles heavier than the suspending fluid can be removed from a gas or liquid in a large settling tank in which the fluid velocity is low and the particles are allowed sufficient time to settle out.

Gravity settlers are of two types:

- Clarifier: A settler that removes virtually all the particles from a liquid

- Classifier: A device that separates the solid into two fractions

Centrifugal settling processes are more efficient than gravity settling processes. A given particle in a given fluid settles under gravitational force at a fixed maximum rate. To increase the settling rate, the force of gravity acting on the particle may be replaced by a much stronger centrifugal force. Centrifugal separators have, to a certain extent, replaced gravity separators because of their greater effectiveness with fine drops and particles and their much smaller size for a given capacity. The most widely used type of centrifugal separator is the cyclone separator. Other widely used types are centrifugal decanters, tubular centrifuges, disk centrifuges, nozzle discharge centrifuges, and centrifugal classifiers.
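
The gain from replacing gravity with centrifugal force can be sketched with Stokes' law for the terminal settling velocity of a small sphere, v = d^2 (rho_p - rho_f) a / (18 mu), where a is the acceleration acting on the particle. This is only an illustration: Stokes' law is an assumption that holds for small particles in slow (creeping) flow, and the function names, particle data, and centrifuge speed below are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def stokes_settling_velocity(diameter, particle_density, fluid_density,
                             viscosity, acceleration=G):
    """Terminal velocity of a small sphere from Stokes' law, in m/s (SI units)."""
    return (diameter**2 * (particle_density - fluid_density) * acceleration
            / (18.0 * viscosity))


def centrifugal_acceleration(rpm, radius):
    """Acceleration omega^2 * r at a given radius of a rotating bowl, in m/s^2."""
    omega = 2.0 * math.pi * rpm / 60.0  # angular speed in rad/s
    return omega**2 * radius


# Illustrative case: 10 micron particle (2500 kg/m^3) in a water-like liquid.
v_gravity = stokes_settling_velocity(10e-6, 2500.0, 1000.0, 0.001)
a_centrifuge = centrifugal_acceleration(rpm=3000.0, radius=0.1)
v_centrifuge = stokes_settling_velocity(10e-6, 2500.0, 1000.0, 0.001, a_centrifuge)

print(f"gravity settling velocity     = {v_gravity:.2e} m/s")
print(f"centrifugal settling velocity = {v_centrifuge:.2e} m/s")
print(f"separation factor (a / g)     = {a_centrifuge / G:.0f}")
```

In this model the settling velocity scales directly with the acceleration, so the ratio of the two velocities equals the separation factor.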

1.3 Thermodynamics

Thermodynamics is the study of the transformation of heat energy into other forms of energy and of other forms of energy into heat. It is a very useful branch of engineering science and is very helpful in the treatment of such processes as refrigeration, flashing, and the development of boilers and steam and gas turbines. Thermodynamics is governed by two principal rules, called the first and second laws of thermodynamics.

First law of thermodynamics

The first law of thermodynamics states that energy can neither be created nor destroyed; it can only be converted from one form to another. This law is also known as the law of conservation of energy. In the thermodynamic sense, heat and work refer to energy in transit across the boundary between the system and its surroundings. These forms of energy can never be stored. Energy is stored in its potential, kinetic, and internal forms. These forms reside with material objects and exist because of the position, configuration, and motion of matter.

Second law of thermodynamics

The second law of thermodynamics states that it is impossible to transfer heat from a cold body to a hot body unless external work is done on the system. Equivalently, no heat engine operating continuously in a cycle can extract heat from a hot reservoir and convert all of it into useful work; some heat must be rejected to a sink. The universal applicability of this science is shown by the fact that physicists, chemists, and engineers all employ it. In each case the basic principles are the same but the applications differ.
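
The heat-engine statement of the second law can be made concrete with the Carnot limit, which this section does not name. The sketch below assumes the standard result that an ideal reversible engine working between absolute temperatures T_hot and T_cold has efficiency 1 - T_cold / T_hot, and combines it with the first law to find the heat that must be rejected to the sink. The function names and numbers are illustrative assumptions.

```python
def carnot_efficiency(t_hot_k, t_cold_k):
    """Upper limit on heat-engine efficiency between two absolute temperatures."""
    if t_cold_k <= 0.0 or t_hot_k <= t_cold_k:
        raise ValueError("require t_hot_k > t_cold_k > 0 (kelvin)")
    return 1.0 - t_cold_k / t_hot_k


def ideal_engine_balance(q_hot, t_hot_k, t_cold_k):
    """Return (maximum work, heat rejected to the sink) for heat input q_hot.

    First law: q_hot = work + q_rejected. Second law: work is limited by
    the Carnot efficiency, so q_rejected is always greater than zero.
    """
    work = q_hot * carnot_efficiency(t_hot_k, t_cold_k)
    return work, q_hot - work


# Illustrative case: 1000 J taken from a 600 K source with a 300 K sink.
work, rejected = ideal_engine_balance(1000.0, 600.0, 300.0)
print(f"maximum work = {work:.0f} J, heat rejected to sink = {rejected:.0f} J")
```

Real engines fall short of this limit, so the heat rejected in practice is larger than the value computed here.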

Thermodynamics enables a chemical engineer to cope with a wide variety of problems. Among the most important of these are the determinations of heat and work requirements for many physical and chemical processes, and the determination of equilibrium conditions for chemical reactions and for the transfer of chemical species between phases.

1.4 Chemical kinetics

This branch is a very important activity in chemical engineering. It is primarily concerned with the exploitation of chemical reactions on a commercial scale. Its goal is the successful design and operation of chemical reactors. This activity, probably more than any other, sets chemical engineering apart as a distinct branch of the engineering profession.

The design of equipment for the physical treatment steps is studied in the unit operations. The chemical treatment step of a process is studied in chemical reaction engineering. The chemical treatment stage is the heart of a process and the core factor that makes or breaks the process economically. Reactor design uses information, knowledge, and experience from a variety of areas such as thermodynamics, chemical kinetics, fluid mechanics, heat transfer, mass transfer, and economics.

1.5 Chemical engineer – scope & responsibilities

Chemical engineers are employed in many process industries representing a diverse range of products, employers, and services. The chemical engineering profession includes a wide variety of activities in a number of institutions including industry, research, government, and academia. Chemical engineering mainly deals with industrial processes in which raw materials are changed into useful products. The chemical engineers develop, design, and engineer both complete processes and the equipment used; choose the proper raw material; operate the plants efficiently, safely, and economically; and see to it that the products meet the requirements set by the customers.

Chemical engineering is both an art and a science. Chemical engineers work in numerous areas besides petroleum refining and the petrochemical industries because their background and experience are easily portable and widely useful. Products of concern to chemical engineers range from commodity chemicals like sulphuric acid and chlorine to high-tech items like lithographic support for the electronics industry (silicon chips, microprocessors) and genetically modified biochemical agents. The number of chemical engineers working throughout the world is enormous. These engineers are employed by both private and public enterprises, and they work in a variety of fields besides process and design. This wide spectrum of applications shows that chemical engineers must be trained to function in any phase of chemical manufacturing. Over a career, a chemical engineer performs various activities and faces many tasks and challenges, from plant design to successful plant operation. To better understand the work of a chemical engineer, let us consider the important activities involved.

1.5.1 Process selection

The selection of a process is one of the most important and time-consuming activities undertaken by a chemical engineer. One process may be energy efficient than the other but the other may be less polluting or may have its raw materials readily available. Selecting a process out from the available options is no easy job because each process has certain advantages and disadvantages. To select a process many constraints have to be faced such as time, available data, investment, and economics. As all industries are mainly concerned with profits, out of all the constraints economics always remains the chief factor in selecting a process.

Deciding whether a process should be batch or continuous is another critical task. Early chemical processing was normally done in batches, and much continues to be done that way. Batches can be measured accurately and are well suited to small-scale production. However, temperature and pressure control can be troublesome.

Furthermore, the time and resources lost in attaining the required conditions, such as temperature and pressure, limit the use of batch processes. On the other hand, continuous processes require far smaller and less expensive equipment, have much less material in process, have less chance to ruin large quantities, have more uniform operating conditions, and give more uniform products than batch processes.

Continuous processes are very suitable for large-scale production. However, they require precise control of flow rates and conditions, which is impossible without high-quality instrumentation. The reduction in plant cost per unit of production is often the major force in deciding whether a process should be continuous or batch.

1.5.2 Operation

Operation of a process plant is another important activity carried out by a chemical engineer. Chemical processing of a raw material into the desired product can only be achieved by operating the chemical plant. The quality and quantity of the product depend directly on the efficient operation of the plant. Smooth operation is a very difficult task and requires the close attention of the engineer at all times. Problems such as temperature and pressure control, maintenance, and safety continue to arise during plant operation. Experience and the application of engineering principles are always needed to troubleshoot these problems. Neglecting a small problem can often lead to bigger, more complex problems and can cause unnecessary halts in production.

To handle plant operation smoothly, a chemical engineer should become familiar early on with industrial equipment such as pumps, compressors, distillation columns, filter presses, and heat exchangers. Almost every plant wants its engineers to be intimately familiar with every pipe and gauge on site. That is why new engineers are made to spend their early days tracing pipelines, an activity known as line tracing. The practice familiarizes engineers with all the pipelines, gauges, valves, and equipment of the plant so that, whenever a fault occurs in any section, they can identify its location and work out a solution immediately.

In fact, troubleshooting is the core of plant operation. Successful operation of a chemical plant depends not only on the original strength of the materials of construction but also on the effects of corrosion. Constant check-ups and inspections must be maintained to avoid corrosion. Mechanical failures are seldom experienced unless there has been previous corrosion or weakening by chemical attack.

A chemical manufacturing process can be divided into the following steps:

- Raw material

- Physical treatment steps

- Chemical treatment steps

- Recycle

- Product

1.5.3 Instrumentation and control

In commercial-scale continuous operations, the function of the workers and the supervising chemical engineer is to maintain the plant in proper running condition. Maintaining the required temperature, pressure, flow rates, and other conditions is a very difficult task, and quality instrumentation is a must. Instruments are the essential tools of modern processing. A chemical engineer must have proper knowledge of the instruments used for measuring and controlling process variables. The ability to design control systems for processes and to work out problems faced in controlling process operations is also essential.

Batch operation uses fewer instruments and hence requires more supervision by the workers and the chemical engineer, because the conditions and procedures differ from start to finish. Programmed instruments can solve even these problems if the expense can be justified. Instrument costs, once a trivial part of the total plant investment, have risen to as much as 25% of the total investment.

The use of computers has reduced this cost to some extent. Earlier plants used mechanical control instruments. These were replaced by pneumatic control systems, which were in turn replaced by electronic control systems. Currently, plant control is carried out by a DCS (Distributed Control System). A DCS incorporates electronic control devices but uses computers to monitor and control process conditions. Although many industries continue to use pneumatic and electronic control systems, the global trend is towards DCS because of its ability to handle plant operation more smoothly. Instrumentation has been forced into this position of eminence by the increase in continuous processes, the increase in labour and supervision costs, the relative unreliability of human actions, and the availability of many types of instruments at decreasing price and increasing reliability.

Instrument types include indicating instruments, recording instruments, and controlling instruments. Two types of instruments are generally used: analog and digital. Analog instruments, such as pressure-spring thermometers and Bourdon pressure gauges, show results by the mechanical movement of some device (e.g. a spring or Bourdon tube), which is proportional to the quantity being measured. Digital devices generally use a transducer, a device that converts the measured signal into some other type of signal, usually electronic or pneumatic. These devices also use electronic circuits that convert the signal to readable numerical figures (digits), which are then displayed and may be recorded.

1.5.4 Chemical process economics

Economics is a vital part of an engineer’s work. Engineers are distinguished from scientists by their consciousness of costs and profits. Economics plays a vital role in the operation, design, and maintenance of every chemical plant. A good chemical engineer always gives economics top priority in every effort. Every engineering decision involves cost considerations. Engineers must keep up with the economic changes that may affect their products.

The primary objective of an engineer’s efforts must be to deliver safely the best product or the most efficient service at the lowest cost to the employer and the consumer. Since change is an outstanding characteristic of chemical procedures, the potential alteration of a process is important not only when the plant is being designed but continuously thereafter.

Judgment based on comparative facts must be exercised in most of the important decisions of a chemical engineer. Careful calculations using local parameters generally lead to clear and just decisions. The yield and conversion of the chemical process form the basis for the material and energy balances, which in turn are the foundation for the cost determination. Primary stress must be laid on these balances to keep plant operation economical and profitable. Economic conditions and limitations are among the most important factors in any plant design activity. The engineer must consider costs and profits constantly throughout the work. Cost per unit of product always turns out to be the key issue for any business enterprise, and an engineer should always work to keep it as low as possible. It is almost always better to sell many units of product at a low profit per unit than a few units at a high profit per unit. An engineer must take into account the volume of production when determining costs and total profits for various types of designs, keeping in view customer needs and demands.
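The point about sales volume can be illustrated with a few lines of arithmetic. The sketch below is only an illustration: the unit counts and profit figures are invented for the example and do not come from any real plant.

```python
def total_profit(units_sold: int, profit_per_unit: float) -> float:
    """Total profit = number of units sold x profit earned on each unit."""
    return units_sold * profit_per_unit


# Hypothetical figures, chosen only to illustrate the comparison.
high_volume = total_profit(units_sold=100_000, profit_per_unit=0.50)
low_volume = total_profit(units_sold=5_000, profit_per_unit=4.00)

print(f"Many units, low profit per unit: {high_volume:,.0f}")
print(f"Few units, high profit per unit: {low_volume:,.0f}")
```

With these assumed numbers the high-volume option earns more in total even though each unit earns far less, which is why production volume has to be considered alongside unit cost.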

1.5.5 Marketing

Whenever a new product is under assessment, market evaluation for that product becomes essential. The job of a chemical engineer then extends to estimating the market for that product. The factors generally considered in the market evaluation are the present and future supply and demand, present and future uses, new uses, present buying habits, price range for products and by-products, and the character, location, and number of possible customers.

The marketing of a product depends not only on its advertisement but also on the quality of the product, its physical condition, and its packaging. Good firms rarely compromise on quality. Proper instrumentation, uniform plant conditions, good operators, and careful supervision lead to quality production. The physical condition of a product has a very strong impact on its marketability. It involves crystal structure, particle size and shape, colour, and moisture content.

Packaging also plays an important role in the marketing of a product, especially for consumer products. However, packaging is often expensive. The most economical containers are refillable bulk ones such as tanks, tank-ships, and tank-cars. But these cannot be used for consumer products, since the appearance of the container is very important to customers. For consumer products, quality packaging with attractive colours, designs, and materials has to be used.

The price of a product is the real concern for a customer. Prices should be kept within the affordable range of a large number of people, since bigger markets lead to larger profits. To enhance the marketing of a product, an engineer should listen to the suggestions and information brought by the salesperson, who is the link between the company and the customer.

1.5.6 Safety

The chemical engineer also has a role to perform in plant safety. Nothing is more dangerous to a plant than fire. Precautions to prevent and extinguish fire must be taken. Employees must be protected against toxic chemicals. Safety measures not only keep employees out of danger but also save money and time by reducing accidents and unnecessary halts in production. Every human being is bound to err sometimes, and at times people also become careless. Too much familiarity with chemicals can breed carelessness; hence well-run plants have safety devices and continuing programmes for alerting those working with a given process to its hazards. Adequate safety and fire protection measures require expert guidance. There is considerable difference of opinion in rating certain chemicals as hazardous and in assessing their degree of toxicity. Standards differ for many toxic and harmful substances; nowadays, however, governments set these standards and enforce them strictly.

1.5.7 Construction of a plant

The construction (erection) of a plant is another activity carried out by a chemical engineer. The presence of the chemical engineer is essential during the erection of the plant in order to implement the design standards and interpret technical and design data whenever needed. The chemical engineer should always work closely with the construction team during the final stages of construction and purchasing designs. Construction of the plant may be started before the final design is completed.

During plant erection, the chemical engineer should visit the plant site to assist in the interpretation of plans and learn methods for improving future designs. The engineer should also be available during the initial start-up of the plant and early phases of operation. During the erection of a plant the engineer becomes intimately connected with the plant and hence learns the internal structure of the plant. The chemical engineer becomes involved with the installation of every pipe and gauge of the plant and this helps greatly while running the plant and eliminating problems faced during operation.

1.5.8 Research and development

Adequate and skilled research with patent protection is required for future profits. The chemical process industry has certain salient characteristics such as rapidly changing procedures, new processes, new raw materials and new markets. Research creates or utilizes these changes. Without forward-looking investigation or research a company would lag behind in the competitive progress of its industry. Development is the adaptation of research ideas to the realities of production and industry. The progress of industry opens up new markets for even the most fundamental, established products.

1.5.9 Management

Owing to the dramatic rise in productivity and recent technological advances, the chemical process industries have become very complex. This complexity has made it very difficult for business graduates who have no knowledge of chemicals and equipment to manage such companies. Chemical companies now prefer to have chemical engineers as their managers.

Management is a very important aspect of plant operation. Handling personnel, most importantly the workers, is one of the most difficult jobs, yet a chemical engineer is always in contact with the workers and most of the time has to rely on them. Dealing with personnel is often called human engineering. The job of a chemical engineer is to control and run machines effectively and efficiently, and there is no machine better or more complex than the human being. Managing people is perhaps the most difficult task an engineer has to perform, but because an engineer is in constant interaction with workers and other personnel, it must be performed effectively. Hence, a good engineer must also be a good manager who listens to their opinions and understands their attitudes. Keeping personnel in high spirits and motivating them is very important.

1.5.10 Process system engineering

Many engineers are realizing that they can no longer think of a process plant as a collection of individually designed operations and processes. It is becoming increasingly evident that each separate unit of a plant influences all others in subtle ways. It is also true that the plant is part of an ecological system extending well beyond its boundaries. The general availability of computers has made it possible to study the dynamic behaviour of plants as well as their static or steady-state behaviour. Such intensive studies have shown new possibilities for plant operation not previously conceived. The next generation of engineers will be studying, analyzing, and optimizing such interacting and complex systems. This is a major improvement over envisioning design as involving simple, non-interacting, static systems that use only unit operations and unit processes.

1.5.11 Environment

The role of a chemical engineer in controlling pollution and waste generation can hardly be overemphasized. Chemical engineers working in environmental engineering develop new methods and techniques to treat wastes generated by the process industries, minimize waste generation, and develop renewable sources of material and energy.

These engineers are working towards developing sustainable and renewable technologies. Their role in the earlier design phases of process industries has now led to new practically fume-less chemical plants.

1.5.12 Design

Design of a chemical process plant is the one activity unique to chemical engineering and is the strongest reason justifying the existence of chemical engineering as a distinct branch of engineering. Design is a creative activity and is perhaps the most important, satisfying, and rewarding activity undertaken by a chemical engineer. It is the synthesis, the putting together of ideas to achieve a desired purpose. The design does not exist at the commencement of a project. The designer starts with a specific objective in mind, a need, and by developing and evaluating possible designs arrives at what is considered the best way of achieving that objective.

A principal responsibility of the chemical engineer is the design, construction, and operation of chemical plants. In this modern age of industrial competition, a successful chemical engineer needs more than a knowledge and understanding of the fundamental sciences and related engineering subjects such as thermodynamics, reaction kinetics, and computer technology. The engineer must also have the ability to apply this knowledge to practical situations for the purpose of accomplishing something that will be beneficial to society. However, in making these applications, the chemical engineer must recognize the economic implications involved and proceed accordingly.

Plant design includes all engineering aspects involved in the development of a new, modified, or expanded industrial plant. In this development the chemical engineer makes economic evaluations of new processes, designs individual pieces of equipment for the proposed new venture, or develops a plant layout for coordination of the overall operation. Because of these design duties, the chemical engineer is often referred to as a design engineer. On the other hand, a chemical engineer specializing in the economic aspects of the design is often referred to as a cost engineer. Chemical engineering design of new chemical plants, and the expansion or revision of existing ones, requires the use of engineering principles and theories combined with a practical realization of the limits imposed by individual conditions.

1.6 “Ten greatest achievements” of chemical engineering

1.6.1 The atom

Biology, medicine, metallurgy, and power generation have all been transformed by the capability to split the atom and isolate isotopes. Chemical engineers played a significant role in achieving both of these results. Early on, chemical facilities were used in warfare, which ultimately resulted in the production of the atomic bomb. Today, these technologies have found uses in more peaceful applications. Medical doctors now use isotopes to monitor bodily functions, quickly identifying clogged arteries and veins. Similarly, biologists gain invaluable insight into the basic mechanisms of life, and archaeologists can accurately date their historical findings.

1.6.2 The plastic age

The 19th century witnessed tremendous achievements in polymer chemistry. However, it required the vision of chemical engineers during the 20th century to make bulk-produced polymers a viable economic reality. When a plastic called Bakelite was introduced in 1908, it heralded the dawn of the “Plastic Age” and quickly found uses in electrical insulation, plugs and sockets, clock bases, iron cooking handles, and fashionable jewelry. Now, plastic has become so ubiquitous that we hardly notice it exists. Yet nearly all aspects of modern life are positively and deeply impacted by plastic.

1.6.3 The human reactor

Chemical engineers have long studied complex chemical processes in detail by breaking them up into smaller units called “unit operations.” Such operations might comprise heat exchangers, filters, chemical reactors, and the like. Subsequently, this concept has also been applied to the human body. Such analysis has helped improve clinical care, suggested improvements in diagnostic and therapeutic devices, and led to mechanical wonders such as artificial organs. Medical doctors and chemical engineers continue to work in tandem to help us live longer, fuller lives.

1.6.4 Wonder drugs for the masses

Chemical engineers have been able to take small quantities of antibiotics developed by distinguished researchers such as Sir Alexander Fleming (who discovered penicillin in 1928) and increase their yields several thousand times through mutation and special brewing techniques. Today’s low-price, high-volume drugs owe their existence to the work of chemical engineers. This ability to bring once scarce materials to all members of society through industrial creativity is a defining characteristic of chemical engineering.

1.6.5 Synthetic fibers

Right from blankets and clothes to beds and pillows, synthetic fibers keep us warm, cozy and provide a good night’s rest. Synthetic fibers also help reduce the strain on natural sources of cotton and wool, and can be tailored to specific applications.

1.6.6 Liquefied air

When ambient air is cooled to very low temperatures (about 320 °F below zero), it condenses into a liquid. Chemical engineers are then able to separate out the different components of air. The purified nitrogen can be used to recover petroleum, freeze food, produce semiconductors, or prevent unwanted reactions, while oxygen is used to make steel, smelt copper, weld metals together, and support the lives of patients in hospitals.
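For readers more used to SI units, the liquefaction temperature quoted above can be converted with the standard Fahrenheit, Celsius, and Kelvin relations. The short sketch below simply applies those relations to the approximate figure of 320 °F below zero; the function and variable names are chosen for this example.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def celsius_to_kelvin(temp_c: float) -> float:
    """Convert degrees Celsius to kelvin."""
    return temp_c + 273.15


liquefaction_f = -320.0  # approximate figure quoted in the text
liquefaction_c = fahrenheit_to_celsius(liquefaction_f)
liquefaction_k = celsius_to_kelvin(liquefaction_c)

print(f"{liquefaction_f:.0f} °F = {liquefaction_c:.1f} °C = {liquefaction_k:.1f} K")
# prints: -320 °F = -195.6 °C = 77.6 K
```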

1.6.7 The environment

Chemical engineers furnish economical solutions to clean up yesterday’s waste and prevent tomorrow’s pollution. Catalytic converters, reformulated gasoline and smoke stack scrubbers all help keep the world clean. Additionally, chemical engineers help reduce the strain on natural materials through synthetic replacements, more efficient processing and new recycling technologies.

1.6.8 Food

Plants require large quantities of nitrogen, potassium, and phosphorus to grow in abundance. Chemical fertilizers can help provide these nutrients to crops, which in turn provide us with a bountiful and balanced diet. Fertilizers are especially important in regions of the earth where food can sometimes be scarce. Advances in biotechnology also offer the potential to further increase worldwide food production. Finally, chemical engineers are at the forefront of food processing, where they help create better-tasting and more nutritious foods.

1.6.9 Petrochemicals

Chemical engineers have helped develop processes like catalytic cracking to break down the complex organic molecules found in crude oil into much simpler components. These building blocks are then separated and recombined to form many useful products, including gasoline, lubricating oils, plastics, synthetic rubber, and synthetic fibers. Petroleum processing is therefore recognized as an enabling technology, without which much of modern life would cease to function.

1.6.10 Synthetic rubber

Chemical engineers play a prominent role in developing today’s synthetic rubber industry. During World War II, synthetic rubber capacity suddenly became of great importance, because modern society runs on rubber. Tires, gaskets, hoses, and conveyor belts (not to mention running shoes) are all made of rubber. Whether you drive, bike, roller-blade, or run, the odds are you are running on rubber.

1.7 Chemical engineering “today & tomorrow”

The ‘Big Four’ engineering fields comprise civil, mechanical, electrical, and chemical engineering. Of these, chemical engineers are numerically the smallest group. However, this relatively small group holds a very important position in many industries, and chemical engineers are, on average, the highest paid of the ‘Big Four’. Also, numerous chemical engineers have found their way into upper management.

Chemical engineering offers a career that enables professionals to contribute in a meaningful way to society. Many young engineers are assigned projects that involve environmental, health, and safety issues associated with chemical processing. The chemical processing industry is committed to producing high-value products that benefit society and have minimal environmental, health, and safety consequences.

2.1 Introduction

Stoichiometry is the calculation of quantities in chemical equations. In a given chemical reaction, stoichiometry tells us what quantity of each reactant we need in order to get enough of our desired product. Because of its real-life applications in chemical engineering as well as research, stoichiometry is one of the most important and fundamental topics in chemistry.

The simplest stoichiometric problem will present you with a certain amount of a reactant and then ask how much of a product can be formed. Here is a generic chemical equation:

2X + 2Y → 3Z

Here is a typically worded problem: Given 20.0 grams of X and sufficient Y, how many grams of Z can be produced?

Solving such problems requires familiarity with molar ratios, molar masses, balancing and interpreting equations, and conversions between grams and moles.
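The grams-to-moles-to-grams route for the generic equation 2X + 2Y → 3Z can be sketched as follows. X and Z are placeholders, so the molar masses used here (40.0 g/mol for X and 30.0 g/mol for Z) are purely hypothetical values picked to make the arithmetic easy to follow; the function name is likewise an assumption for this example.

```python
def grams_of_product(
    grams_reactant: float,
    molar_mass_reactant: float,
    coeff_reactant: int,
    coeff_product: int,
    molar_mass_product: float,
) -> float:
    """Mass of product formed from a given mass of the limiting reactant."""
    # Step 1: grams of reactant -> moles of reactant
    moles_reactant = grams_reactant / molar_mass_reactant
    # Step 2: moles of reactant -> moles of product, using the molar ratio
    # taken from the coefficients of the balanced equation
    moles_product = moles_reactant * coeff_product / coeff_reactant
    # Step 3: moles of product -> grams of product
    return moles_product * molar_mass_product


# 2X + 2Y -> 3Z with hypothetical molar masses (g/mol)
M_X = 40.0  # assumed for illustration only
M_Z = 30.0  # assumed for illustration only

mass_z = grams_of_product(20.0, M_X, coeff_reactant=2, coeff_product=3,
                          molar_mass_product=M_Z)
print(f"20.0 g of X gives {mass_z:.1f} g of Z")
```

Under these assumed molar masses, 20.0 g of X is 0.50 mol, which yields 0.75 mol of Z, or 22.5 g. With real substances, the molar masses would be taken from the periodic table.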

The meaning of a chemical equation

Chemical equations give information in three major areas:

- They tell us what substances are reacting (those being used up) and what substances are products (those being made)

- The coefficients of a balanced equation tell us in what ratio the substances react or are produced

- They show the relative amounts of all substances involved in the reaction

2.2 Understanding chemical formulas and equations

One of the most ‘mysterious’ parts of chemical engineering is the knowledge of writing chemical formulas and equations. It is almost magical that a chemical engineer hears the name of a substance or chemical reaction and can instantly translate this into a chemical formula or equation. The depth of knowledge possessed by a chemical engineer is beyond the scope of this course and manual. But we shall definitely make a beginning at unraveling the mystery of this section of chemical engineering.

A chemical reaction takes place between two or more chemicals, but not every combination of chemicals reacts. For example, if zinc and iron are placed together nothing happens. Even when heated to high temperatures the two metals merely fuse and do not react chemically. It is therefore important to understand how and why chemical reactions take place.

In their quest to make gold out of lead, the alchemists of old discovered that a certain type of compound (or element) reacted only with another specific type of compound (or element). Gold was considered pure because it has virtually no reaction with any compound under standard conditions. On the other hand, if even a tiny bit of sodium comes into contact with water there is a vigorous and often explosive reaction. One conclusion was that a reaction took place even when extremely minute quantities of two reactive substances came into contact with each other. In other words, whether the reaction occurred was independent of the quantity of reactants. The term used today to describe the smallest part of an element (pure substance) is the ‘atom’.

The first reference to this particle is found in the Vedic Period in India (around 1500 BC) where the learned sage Maharshi Kanad propounded the idea of ‘Anu’ or the smallest particle of matter that can exist independently.

The term atom was first used by the Greek philosopher Democritus; in Greek it means ‘indivisible’. Later, the works of John Dalton (1808), William Crookes (1878), and JJ Thomson (1897) further defined the structure of the atom.

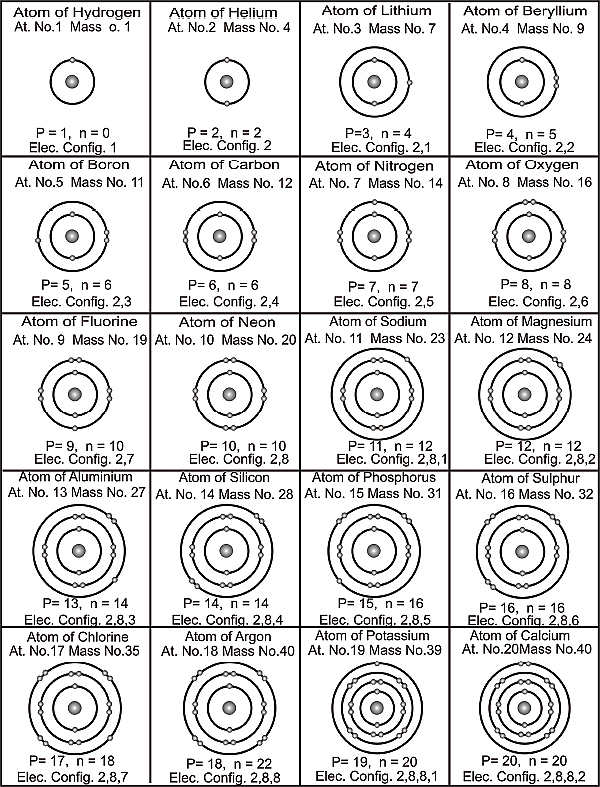

But it was the work of Rutherford and Niels Bohr that gave rise to the structure of the atom that is in use even today. Although science has since discovered ‘sub-atomic’ particles, the principles of the Rutherford-Bohr model have remained unchanged.

In chemistry it is believed that a chemical reaction takes place at the atomic level. To understand a chemical reaction and to classify elements based on their reactivity a basic understanding of the structure of the atom is necessary.

2.2.1 The structure of the atom

Rutherford conducted exhaustive experiments to determine the structure of the atom and was the first person to actually propose a model of an atom. He based his research on William Crookes’ discovery of charged particles that were called electrons and on JJ Thomson’s model of an atom. Since he found that both theories proposed by his predecessors were flawed, he proposed his own model based on the experiments he conducted. The salient feature of his model was that an atom consists of a nucleus of positively charged protons with negatively charged electrons revolving around it. Hence an atom was thought of as containing charged particles while remaining electrically neutral overall.

In 1913 Niels Bohr, a Danish physicist, showed that Rutherford’s theory could not be reconciled with the laws of mechanics and electrodynamics and was therefore flawed. He then suggested that electrons revolving around the nucleus do so in fixed orbits (also known as shells or energy levels). It is this basic information that revolutionized the way a chemical reaction was viewed.

The model of an atom proposed by Bohr details many aspects that are beyond the scope of our study; only those relevant to describing a chemical reaction are used here.

The basic structure of an atom was thought to be a central nucleus of positively charged particles called protons, surrounded by negatively charged particles called electrons that revolved at very high speed around the nucleus. It was calculated that the mass of an electron was negligible compared with the mass of an atom. It was further believed that, to sustain stability and remain stationary, a proton had to have a higher mass. Since an atom was always electrically neutral, the number of protons always equaled the number of electrons in an atom. But this picture was soon found to be incomplete, because when the system of a.m.u. (atomic mass unit) was used to calculate the mass of an atom the results did not add up. It was experimentally determined that the mass of the protons was far less than the total mass of the atom. The difference in mass was explained by proposing that the nucleus also contained particles that had the same mass as protons but no electric charge. These particles were called neutrons.

To begin understanding a chemical reaction we need to know the three most important parts of the atom that have just been referred to along with their properties, symbols and electric charge. The table below illustrates all of these.

| Sub-atomic particle | Definition | Symbol |
| --- | --- | --- |
| Electron | A particle that revolves around a central nucleus; has negligible mass; has a negative charge of 1. | e |
| Proton | A particle making up a nucleus; has a mass of one hydrogen atom; has a positive charge of 1. | p |
| Neutron | A particle making up a nucleus; has a mass almost equal to one hydrogen atom; has no charge. | n |

The arrangement of these three sub-atomic particles gives rise to unique elements. It can therefore be said that all elements consist of these particles but acquire their uniqueness from the arrangements of these particles in their respective atoms.

The number of protons in the nucleus of an atom is called the Atomic Number and is denoted by the letter ‘Z’. Based on the fact that an atom is electrically neutral it follows that the number of protons is equal to the number of electrons present in the atom.

The total number of protons and neutrons present in the nucleus of an atom is called the Mass Number and is denoted by the letter ‘A’.

Based on the above information it follows that

- Z = p = e

- A = n + p
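Rearranging the second relation gives the number of neutrons as n = A – Z. The following is a minimal sketch of that calculation; the function name and the input check are choices made for this example, while the sodium and chlorine values are the usual mass numbers for those elements.

```python
def neutron_count(mass_number: int, atomic_number: int) -> int:
    """Number of neutrons n = A - Z, from A = n + p with p = Z."""
    if atomic_number < 1 or mass_number < atomic_number:
        raise ValueError("need Z >= 1 and A >= Z")
    return mass_number - atomic_number


# Sodium: A = 23, Z = 11
print(neutron_count(23, 11))  # 12
# Chlorine: A = 35, Z = 17
print(neutron_count(35, 17))  # 18
```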

The arrangement of electrons revolving around a nucleus is of prime importance and is based on the theory put forward by Bohr and Bury. The salient features of this theory are detailed below.

- The maximum number of electrons that can be present in any shell of an atom is given by the formula 2n², where n is the number of the shell counted outward from the nucleus (the shell closest to the nucleus is numbered 1, the next one 2, and so on).

- The outermost shell of an atom cannot have more than 8 electrons, and the last-but-one shell cannot have more than 18 electrons.

- A shell need not hold the maximum number of electrons permitted by the formula 2n² before another shell is formed. It is observed that a new shell is formed as soon as the outermost shell attains 8 electrons.

- An atom stops reacting chemically once the outermost shell acquires 8 electrons, or once it has only one shell containing 2 electrons (as in the case of helium).
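The rules above can be turned into a small routine that distributes electrons over shells. This is a simplified sketch, not a general method: it caps every shell at the smaller of the shell-capacity formula and 8 electrons, which reproduces the simple shell picture only for the first 20 elements, so the function (whose name is chosen for this example) rejects larger atomic numbers.

```python
def shell_capacity(n: int) -> int:
    """Maximum electrons in shell n according to the 2n^2 rule."""
    return 2 * n ** 2


def electron_configuration(atomic_number: int) -> list[int]:
    """Electrons per shell for elements with Z = 1 to 20 (simplified model)."""
    if not 1 <= atomic_number <= 20:
        raise ValueError("this simplified sketch only covers Z = 1 to 20")
    remaining = atomic_number
    shells = []
    n = 1
    while remaining > 0:
        # A new shell starts once the current one holds 8 electrons,
        # even if 2n^2 would allow more (e.g. 18 for n = 3).
        capacity = min(shell_capacity(n), 8)
        electrons = min(remaining, capacity)
        shells.append(electrons)
        remaining -= electrons
        n += 1
    return shells


print(electron_configuration(11))  # sodium  -> [2, 8, 1]
print(electron_configuration(17))  # chlorine -> [2, 8, 7]
print(electron_configuration(20))  # calcium -> [2, 8, 8, 2]
```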

Structure of an atom

Based on the information we can now tabulate the number of electrons in each shell or orbit for a given element. The table below lists some of the elements commonly used.

| Element | No. of neutrons (n = A – Z) | No. of protons (p = Z) | No. of electrons (e = Z) | Electronic configuration |
|---|---|---|---|---|
| Hydrogen ₁H¹ | 1 – 1 = 0 | 1 | 1 | 1 |
| Helium ₂He⁴ | 4 – 2 = 2 | 2 | 2 | 2 |
| Carbon ₆C¹² | 12 – 6 = 6 | 6 | 6 | 2,4 |
| Nitrogen ₇N¹⁴ | 14 – 7 = 7 | 7 | 7 | 2,5 |
| Oxygen ₈O¹⁶ | 16 – 8 = 8 | 8 | 8 | 2,6 |
| Neon ₁₀Ne²⁰ | 20 – 10 = 10 | 10 | 10 | 2,8 |
| Sodium ₁₁Na²³ | 23 – 11 = 12 | 11 | 11 | 2,8,1 |
| Aluminum ₁₃Al²⁷ | 27 – 13 = 14 | 13 | 13 | 2,8,3 |
| Sulphur ₁₆S³² | 32 – 16 = 16 | 16 | 16 | 2,8,6 |
| Chlorine ₁₇Cl³⁵ | 35 – 17 = 18 | 17 | 17 | 2,8,7 |
| Calcium ₂₀Ca⁴⁰ | 40 – 20 = 20 | 20 | 20 | 2,8,8,2 |
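The entries in the table can be reproduced with a short Python sketch. It is a simplified illustration, not a general method: the shell limits `[2, 8, 8, 2]` are an assumption that holds only for the first 20 elements, and the function names are hypothetical.

```python
# Shell limits that apply to the first 20 elements only (an assumption of this
# sketch): a new shell is started as soon as the outermost shell holds 8 electrons.
SHELL_LIMITS = [2, 8, 8, 2]


def neutron_count(mass_number: int, atomic_number: int) -> int:
    """Number of neutrons, n = A - Z."""
    return mass_number - atomic_number


def electron_configuration(atomic_number: int) -> list[int]:
    """Electrons per shell for a neutral atom with 1 <= Z <= 20."""
    if not 1 <= atomic_number <= 20:
        raise ValueError("this sketch only covers the first 20 elements")
    remaining = atomic_number
    shells = []
    for limit in SHELL_LIMITS:
        if remaining == 0:
            break
        placed = min(limit, remaining)  # fill the shell or use up what is left
        shells.append(placed)
        remaining -= placed
    return shells


# Sodium: Z = 11, A = 23
print(neutron_count(23, 11))       # 12
print(electron_configuration(11))  # [2, 8, 1]
```

Heavier elements do not follow this simple filling order, so the sketch deliberately rejects atomic numbers above 20.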

From the above information an important term in Chemistry is coined: Valency. This is the number of electrons present in the last shell of a neutral atom. It is believed that when two atoms of different elements undergo a chemical change, the valency electrons are transferred from one atom to the other.

A chemical bond is described as the force that actually holds the atoms together within a molecule of substances that have undergone a chemical reaction. So why do elements combine or undergo a chemical reaction?

It has long been known that ‘noble’ gases like Neon do not react chemically. A common feature among all noble gases is that they have either 2 or 8 electrons in their last shell or orbit, whereas all other elements have between 1 and 7 electrons in their last shell. In 1916 Kossel and Lewis used these observations and independently came to the conclusion that a duplet (two electrons in the last shell) or an octet (eight electrons in the last shell) is the most stable configuration for an atom. They further stated that in this configuration an atom is in its minimum state of energy.

Based on this assumption it follows that each atom tries to attain a configuration of duplet or octet in its last shell. Those with one electron in the last shell find it easier to get rid of that electron and those with seven in their last shell find it easier to acquire a single electron. This giving and taking of electrons is what determines whether two elements combine chemically or not. It can further be concluded that all atoms aspire to be ‘noble’, that is, to attain chemical stability by acquiring an electronic configuration similar to that of a noble gas. To illustrate this, Figure 2.2 shows the electronic configuration of the first 20 elements appearing in the Periodic Table.

Figure 2.2: Electronic configuration of atoms

When an atom gives up an electron it acquires a positive charge (+) and when it acquires an electron it becomes negatively charged (–). This can be illustrated with a simple example.

Na – 1e⁻ → Na⁺

Cl + 1e⁻ → Cl⁻

In both the examples a stable configuration of 8 electrons (in the last orbit) is obtained. This is the reason why Sodium (Na) and Chlorine (Cl) react vigorously to produce Sodium Chloride (NaCl). The transfer of electrons from one atom to another gives rise to another important term often used in chemistry: Chemical Bond.
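The electron transfer between sodium and chlorine can be traced with a small Python sketch. It is only a bookkeeping illustration using the shell lists 2,8,1 and 2,8,7 from the table earlier in this section; the helper names are hypothetical.

```python
sodium = [2, 8, 1]    # electronic configuration of a neutral Na atom
chlorine = [2, 8, 7]  # electronic configuration of a neutral Cl atom


def lose_electron(shells):
    """Configuration after one electron leaves the outermost shell."""
    new_shells = shells[:-1] + [shells[-1] - 1]
    if new_shells[-1] == 0:
        new_shells.pop()  # an emptied shell no longer counts as the last shell
    return new_shells


def gain_electron(shells):
    """Configuration after one electron joins the outermost shell."""
    return shells[:-1] + [shells[-1] + 1]


sodium_ion = lose_electron(sodium)       # Na+
chloride_ion = gain_electron(chlorine)   # Cl-
print(sodium_ion, chloride_ion)          # [2, 8] [2, 8, 8]
```

Both ions end with 8 electrons in their last shell, which is the stable octet described above.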

In the periodic table the elements are listed in a prescribed format. Besides the obvious grouping of atomic weights the other major criterion used is the number of electrons in the last shell. This is why Lithium, Sodium, and Potassium, appear in the same column (each has one electron in its last orbit).

It is now evident why elements react, and why Sodium and Potassium will not react chemically with each other: both of them want to get rid of an electron to obtain stability. It can therefore be concluded that a chemical reaction will most likely take place between an element that wants to get rid of an electron and one that wants to receive an electron. In the example stated above Sodium wants to give up an electron and Chlorine wants to receive an electron. This is the reason that when these two elements come into contact with each other they react vigorously.

2.3 Balancing chemical equations

The first step in balancing an equation is to write each chemical component symbolically, in the form in which it exists in nature. A balanced chemical equation obeys the Law of Conservation of Mass: the amount of each element that reacts must equal the amount of the same element in the newly formed compounds. An equation must be balanced before it can be used in a chemically meaningful way.

A balanced equation has equal numbers of each type of atom on each side of the equation. The Law of Conservation of Mass is the rationale for balancing a chemical equation. Here is the example equation for this lesson:

H₂ + O₂ → H₂O

It is an unbalanced equation (sometimes also called a skeleton equation). This means that the number of atoms of at least one element differs between the two sides of the arrow.

In the example equation, there are two atoms of hydrogen on each side, but there are two atoms of oxygen on the left side and only one on the right side.

A balanced equation must have equal numbers of each type of atom on both sides of the arrow.

An equation is balanced by changing coefficients in a somewhat trial-and-error fashion. It is important to note that only the coefficients can be changed, never a subscript. The coefficient times the subscript gives the total number of atoms of that element.
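The atom-counting rule (coefficient times subscript) lends itself to a short Python sketch that checks whether an equation is balanced. This is a minimal illustration that assumes simple formulas without brackets, such as H2O or NH3; the function names are hypothetical.

```python
import re
from collections import Counter


def count_atoms(side):
    """Total atoms of each element on one side of an equation.

    side is a list of (coefficient, formula) pairs, e.g. [(2, "H2"), (1, "O2")].
    """
    totals = Counter()
    for coefficient, formula in side:
        # Each match is an element symbol followed by an optional subscript.
        for symbol, subscript in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
            totals[symbol] += coefficient * int(subscript or 1)
    return totals


def is_balanced(reactants, products):
    """True when every element has the same atom count on both sides."""
    return count_atoms(reactants) == count_atoms(products)


# Skeleton equation: H2 + O2 -> H2O
print(is_balanced([(1, "H2"), (1, "O2")], [(1, "H2O")]))  # False
# With coefficients: 2H2 + O2 -> 2H2O
print(is_balanced([(2, "H2"), (1, "O2")], [(2, "H2O")]))  # True
```

Only the coefficients are passed in as numbers that can vary; the formulas, and therefore the subscripts, stay fixed, which mirrors the rule stated above.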

Sample problem

As a sample exercise, consider the equation given below. To determine whether this reaction is balanced you must first determine how many atoms of each type are on the reactant side (left-hand side) of the equation and how many atoms of each type are on the product side (right-hand side).

N₂ + H₂ → NH₃

In this example, you have two nitrogen atoms and two hydrogen atoms on the reactant side but only one nitrogen atom and three hydrogen atoms on the product side. For balancing the equation, we are not concerned what molecules these atoms are in, just the number of atoms of each type.

To balance this reaction, it is best to choose one kind of atom to balance initially. Let’s choose nitrogen in this case. To obtain the same number of nitrogen atoms on the product side as on the reactant side requires multiplying the number of product NH₃ molecules by two to give:

N₂ + H₂ → 2NH₃

As you can see above, once we know what the molecules are (N₂, H₂, and NH₃ in this case) we cannot change them, only how many of them there are. The nitrogen atoms are now balanced, but there are six atoms of hydrogen on the product side and only two of them on the reactant side. The next step requires multiplying the number of hydrogen molecules by three to give:

N₂ + 3H₂ → 2NH₃

As a final step, make sure to go back and check whether you indeed have the same number of each type of atom on the reactant side as on the product side. In this example, we have two nitrogen atoms and six hydrogen atoms on both sides of the equation. We now have a balanced chemical equation for this reaction:

N₂ + 3H₂ → 2NH₃
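The trial-and-error procedure can also be sketched in Python as a brute-force search over small whole-number coefficients. This is an illustrative approach chosen for its simplicity, not a method given in this manual; it assumes formulas without brackets and a reaction with a single set of smallest coefficients, and the names `count_atoms`, `balance` and `max_coefficient` are hypothetical.

```python
import re
from collections import Counter
from itertools import product


def count_atoms(coefficients, formulas):
    """Total atoms of each element for one side of an equation."""
    totals = Counter()
    for coefficient, formula in zip(coefficients, formulas):
        for symbol, subscript in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
            totals[symbol] += coefficient * int(subscript or 1)
    return totals


def balance(reactants, products, max_coefficient=6):
    """Try coefficient sets in increasing order; return the first balanced one.

    Returns a tuple of coefficients (reactants first, then products),
    or None if nothing balances within max_coefficient.
    """
    split = len(reactants)
    total_species = split + len(products)
    for coefficients in product(range(1, max_coefficient + 1), repeat=total_species):
        left = count_atoms(coefficients[:split], reactants)
        right = count_atoms(coefficients[split:], products)
        if left == right:
            return coefficients
    return None


# N2 + H2 -> NH3
print(balance(["N2", "H2"], ["NH3"]))  # (1, 3, 2)
```

The result (1, 3, 2) corresponds to one N2, three H2 and two NH3, the same coefficients reached by hand in the sample problem.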

2.4 Chemical periodicity

Lothar Meyer and Dimitri Mendeleev both discovered meaningful patterns of properties among the approximately 63 elements known in 1865. Both listed the elements in order of increasing atomic weight and saw that the properties repeat, a phenomenon called periodicity.

Mendeleev offered some bold, but correct, proposals about places in the scheme that seemed inconsistent and so is generally given credit for the development of the periodic table. Mendeleev’s periodic table left holes where a known element would not properly fit. The classic example is germanium, which was unknown. There was no element in the group that fits the properties of the element below silicon and to the left of arsenic. Mendeleev left that position empty and proposed that the element that belonged there, which he called eka-silicon, was simply yet to be discovered. Within a few years, it was found and its properties matched Mendeleev’s predictions almost perfectly.

At the time of Mendeleev, scientists did not know about the structure of the atom or about subatomic particles, and so they did not know about atomic numbers. The atomic number is the number of protons in the nucleus and therefore equals the charge of the nucleus. The periodic table is actually arranged in order of increasing atomic number, not increasing atomic weight.

A periodic table is included in Appendix A for reference.

2.4.1 Ionization energy

The ionization energy, IE, is the energy required to remove the outermost electron from a gaseous atom or ion. The first ionization energy, IE₁, is the energy for the removal of an electron from a neutral, gaseous atom: M(g) → M⁺(g) + e⁻. Metallic atoms tend to lose enough electrons to gain the electron configuration of the preceding noble gas.

There are periodic trends in the ionization energies, also tied to the effective nuclear charge. As the effective nuclear charge increases, it requires more energy to remove the outermost electron from an atom. Consequently, ionization energy is also related to the atomic radius, with ionization energy increasing as atomic radius decreases. Therefore, the first ionization energy increases from left to right in a period and from bottom to top in a group.

2.4.2 Electron affinities

Electron affinity, E, is the energy change of the reaction of adding an electron to a gaseous atom or ion: M(g) + e⁻ → M⁻(g). These reactions tend to be exothermic and so the values of E are generally negative.

In general, electron affinity tends to decrease (become more negative) from left to right in a period. Going down a group, there is little change in the electron affinities. Negative electron affinity means that the atom gains electrons easily.

2.4.3 Sizes of ions

Recall that atoms increase in size going from right to left across a period and from top to bottom in a group.

Cations are smaller than their parent atom because the effective nuclear charge on the outermost electrons is greater in the cation. The number of protons remains the same but the number of screening electrons decreases.

Anions are larger than their parent atoms because the effective nuclear charge on the outermost electrons is smaller in the anion. The number of protons remains the same but the number of screening electrons increases.

Isoelectronic series are groups of atoms and ions which have the same electronic configuration. Within isoelectronic series, the more positive the charge, the smaller the species and the more negative the charge, the larger the species.
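As an illustration, the Python sketch below takes five common ions, confirms that they all contain the same number of electrons, and orders them by the charge rule just stated. The choice of ions is an example added here, not one taken from the manual.

```python
# (label, atomic number Z, ionic charge)
species = [
    ("Na+", 11, +1),
    ("O2-", 8, -2),
    ("Al3+", 13, +3),
    ("F-", 9, -1),
    ("Mg2+", 12, +2),
]


def electron_count(atomic_number, charge):
    """Electrons in an ion: a positive charge means electrons were removed."""
    return atomic_number - charge


counts = {label: electron_count(z, charge) for label, z, charge in species}
is_isoelectronic = len(set(counts.values())) == 1

# Within an isoelectronic series the most negative species is the largest.
largest_to_smallest = [label for label, _, _ in sorted(species, key=lambda s: s[2])]

print(counts)
print(is_isoelectronic)     # True
print(largest_to_smallest)  # ['O2-', 'F-', 'Na+', 'Mg2+', 'Al3+']
```

All five species hold 10 electrons, so the only thing that differs is the number of protons pulling on them, which is why the size falls as the positive charge rises.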

Having understood the fundamental way in which atoms react we will now look at how a chemical engineer uses this information to determine reactions, quantities and product values in the field.

2.5 Molecular weight

The molecular weight of a substance is the weight in atomic mass units of all the atoms in a given formula.

An atomic mass unit is defined as 1/12 of the weight of one atom of the carbon-12 isotope. The old symbol was amu, while the currently preferred symbol is u (a lower case letter u).

Carbon-12 is defined as weighing exactly 12 amu. This is the starting point for how much an atom weighs.

The molecular weight of a substance is needed to tell us how many grams are in one mole of that substance.

The four steps used to calculate a substance’s molecular weight are mentioned below:

Step One: Determine how many atoms of each different element are in the formula.

Step Two: Look up the atomic weight of each element in a periodic table.

Step Three: Multiply step one times step two for each element.

Step Four: Add the results of step three together and round off as necessary.
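The four steps above can be sketched in Python as follows. The atomic weights in the lookup table are rounded values for a handful of elements, included here as assumptions for illustration; a periodic table should be consulted for other elements or more precise figures.

```python
# Step Two: atomic weights (rounded, in atomic mass units) for a few elements.
ATOMIC_WEIGHTS = {
    "H": 1.008,
    "C": 12.011,
    "N": 14.007,
    "O": 15.999,
    "Na": 22.990,
    "Cl": 35.45,
}


def molecular_weight(atom_counts):
    """Molecular weight from a mapping of element symbol to number of atoms.

    atom_counts is the result of Step One, e.g. {"H": 2, "O": 1} for water.
    """
    # Step Three: multiply count by atomic weight; Step Four: add the results.
    return sum(ATOMIC_WEIGHTS[symbol] * count for symbol, count in atom_counts.items())


water = molecular_weight({"H": 2, "O": 1})
ammonia = molecular_weight({"N": 1, "H": 3})
print(round(water, 3))    # 18.015
print(round(ammonia, 3))  # 17.031
```

The same number, read in grams, is the mass of one mole of the substance.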

2.6 The mole and molar mass

The mole is the standard method in chemistry for communicating how much of a substance is present.

Here is how the International Union of Pure and Applied Chemistry (IUPAC) defines the mole:

The mole is the amount of substance of a system which contains as many elementary entities as there are atoms in 0.012 kilogram of carbon-12. When the mole is used, the elementary entities must be specified and may be atoms, molecules, ions, electrons, other particles, or specified groups of such particles.

One mole contains as many entities as there are atoms in 12 grams of carbon-12 (or 0.012 kilogram). There are 6.022 × 10²³ atoms in 12 grams of carbon-12. This number has been very carefully measured in a number of ways over many decades.

Avogadro’s number

The number 6.022 × 10²³ is so important that it has a name. It is called Avogadro’s number and has the symbol N. It is so named in honor of Amedeo Avogadro, an Italian chemist, who, in 1811, made a critical contribution (recognized only in 1860 after his death) which helped greatly with the measurement of atomic weights.
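To show how Avogadro’s number is used in practice, the minimal Python sketch below converts a mass into moles and then into a count of entities. The function names are illustrative, and the molar mass of water (18.015 g per mole) is a rounded value assumed for the example.

```python
AVOGADRO = 6.022e23  # entities per mole, as quoted in the text


def moles(mass_in_grams, molar_mass):
    """Amount of substance in moles; molar_mass is in grams per mole."""
    return mass_in_grams / molar_mass


def entity_count(mass_in_grams, molar_mass):
    """Number of atoms or molecules contained in the given mass."""
    return moles(mass_in_grams, molar_mass) * AVOGADRO


# 12 grams of carbon-12 is exactly one mole of atoms.
carbon_atoms = entity_count(12.0, 12.0)
# 36.03 grams of water is about two moles of molecules.
water_molecules = entity_count(36.03, 18.015)

print(f"{carbon_atoms:.3e}")     # 6.022e+23
print(f"{water_molecules:.3e}")  # about 1.204e+24
```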

2.7 Percent composition

Percent composition is the percent by mass of each element present in a compound.

Consider water (H₂O) as an example.

One mole of water is 18.0152 grams.

In one mole of water there are two moles of H atoms, weighing 2 × 1.008 = 2.016 grams. That is how many grams of hydrogen are present in one mole of water.

There is also one mole of oxygen atoms weighing 16.00 grams in the mole of water.

To get the percentage of hydrogen, divide 2.016 by 18.015 and multiply by 100, giving 11.19%.

For oxygen it is 16.00 ÷ 18.015 × 100 = 88.81%.
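The water calculation can be repeated in a few lines of Python. This is a minimal sketch; the function name `percent_composition` is hypothetical, and it uses the same rounded masses as the text (1.008 g per mole of H and 16.00 g per mole of O).

```python
def percent_composition(element_masses):
    """Percent by mass of each element in one mole of a compound.

    element_masses maps each element to the grams of it in one mole of compound.
    """
    total = sum(element_masses.values())
    return {element: 100 * mass / total for element, mass in element_masses.items()}


# One mole of water: two moles of H atoms and one mole of O atoms.
water = percent_composition({"H": 2 * 1.008, "O": 16.00})
for element, percent in water.items():
    print(f"{element}: {percent:.2f}%")
# H: 11.19%
# O: 88.81%
```

The percentages always add up to 100, which is a useful check on the arithmetic.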

2.7.1 Molar ratios